Automation - Perl / Python - Processing Workflow

Introduction

I started this project because I wanted to scrape specific attributes and data from one of the distributed systems I work with. After completing the initial tool for extracting the data I wanted to assess, I saw the potential for the application to introduce some configuration management and automation.

During my investigation for this project, I wanted to see if I could further optimise the process by containerising the application and running tasks in parallel in a Docker environment. Unfortunately, time constraints prevented this part of the project from being fully realised, although I was able to containerise a large portion of the application. This included creating the initial container, installing the application into it, configuring the database to work within the container environment, configuring the automation scripts to run within the container, and storing the container in a repository.

The Brief

- Bulk import of P2/11 data files into the Iris application

- Batch process the imported data

- Automatically create P1/11 comparison plots

- Report on the status of each file that has been processed

- Report on the status of all the lines that have been processed

- The tool should be able to run in all appropriate environments, including a cloud-hosted SaaS platform

The Input Parameters

- Iris Application

- P2/11 files

- P1/11 files

The Requirements

- I need to register P2/11 files to tell the automation application which files to process

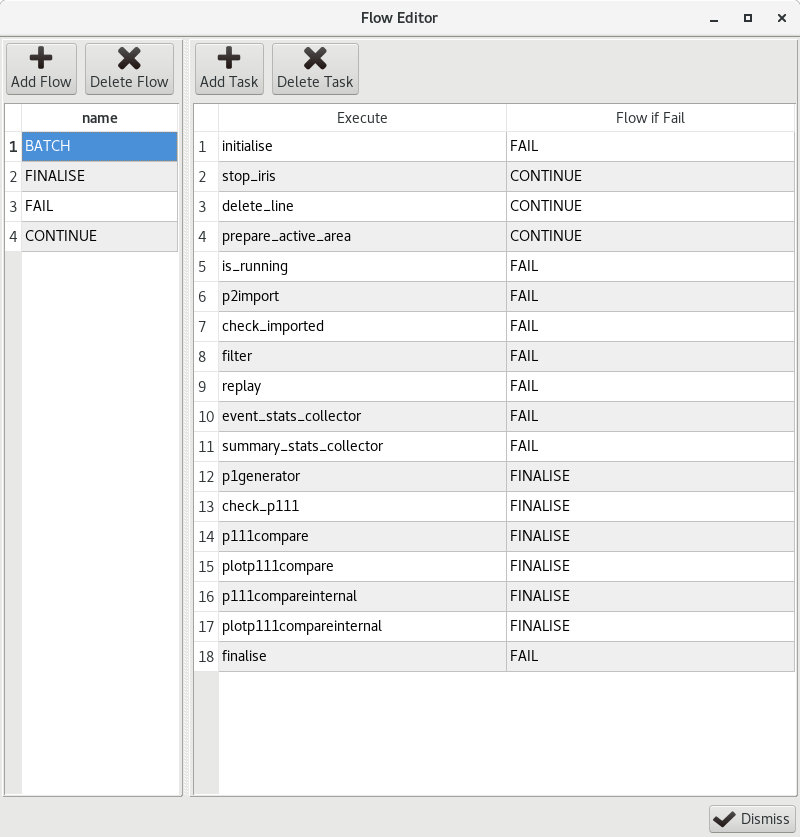

- I need to be able to define the processing tasks, or workflow, that the automation should follow

- The following automated steps are required to carry out the automation task:

  - Starting / stopping the Iris application

  - Updating the version of Iris in use

  - Importing the P2/11 files into Iris

  - Filtering the imported data

  - Adjusting the imported data

  - Generating files in the P1/11 format

  - Comparing the P1/11 file with a client-supplied deliverable

  - Generating processing statistics

  - Logging the application's performance

- The automation must be able to run in a headless environment, for the case where Docker is needed to scale the batch processing through containers

- The user will be able to browse the results of the automation task

- The user will be able to monitor the progress of the automation task as it runs
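The workflow requirement above can be pictured as an ordered list of named steps that run one after another, with a status recorded for each. The following is only a minimal sketch in Python, not the project's Perl code: the step functions, the `StepResult` record and the `run_workflow` helper are all hypothetical names, and the step bodies are placeholders for the real Iris operations.

```python
import time
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

# Hypothetical step signature: receives a shared context dict, raises on failure.
Step = Callable[[Dict], None]


@dataclass
class StepResult:
    name: str
    status: str          # "ok", "failed" or "skipped"
    seconds: float
    message: str = ""


def run_workflow(steps: List[Tuple[str, Step]], context: Dict) -> List[StepResult]:
    """Run steps in order; after the first failure, mark the rest as skipped."""
    results: List[StepResult] = []
    failed = False
    for name, step in steps:
        if failed:
            results.append(StepResult(name, "skipped", 0.0))
            continue
        start = time.perf_counter()
        try:
            step(context)
            results.append(StepResult(name, "ok", time.perf_counter() - start))
        except Exception as exc:
            failed = True
            results.append(
                StepResult(name, "failed", time.perf_counter() - start, str(exc))
            )
    return results


# Placeholder steps standing in for the real Iris operations (assumptions).
def import_files(ctx: Dict) -> None:
    ctx["imported"] = list(ctx["files"])


def filter_data(ctx: Dict) -> None:
    if not ctx["imported"]:
        raise ValueError("nothing was imported")


def generate_output(ctx: Dict) -> None:
    ctx["outputs"] = len(ctx["imported"])


WORKFLOW = [
    ("import", import_files),
    ("filter", filter_data),
    ("generate", generate_output),
]

for result in run_workflow(WORKFLOW, {"files": ["line_001"]}):
    print(f"{result.name:10} {result.status}")
```

Keeping the workflow as plain data (a list of name and function pairs) means a different processing flow can be configured without changing the runner, and the per-step timings give a simple starting point for performance logging.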

The Implementation

To facilitate this task, a series of Perl modules was created to provide the required functional blocks. Initially, these consisted of:

- Structure.pm - Defines a small PostgreSQL database structure to store the application-specific parameters

- Logging.pm - Provides basic error reporting for the application

- Config.pm - Provides an interface between the Perl application and the Iris application's configuration

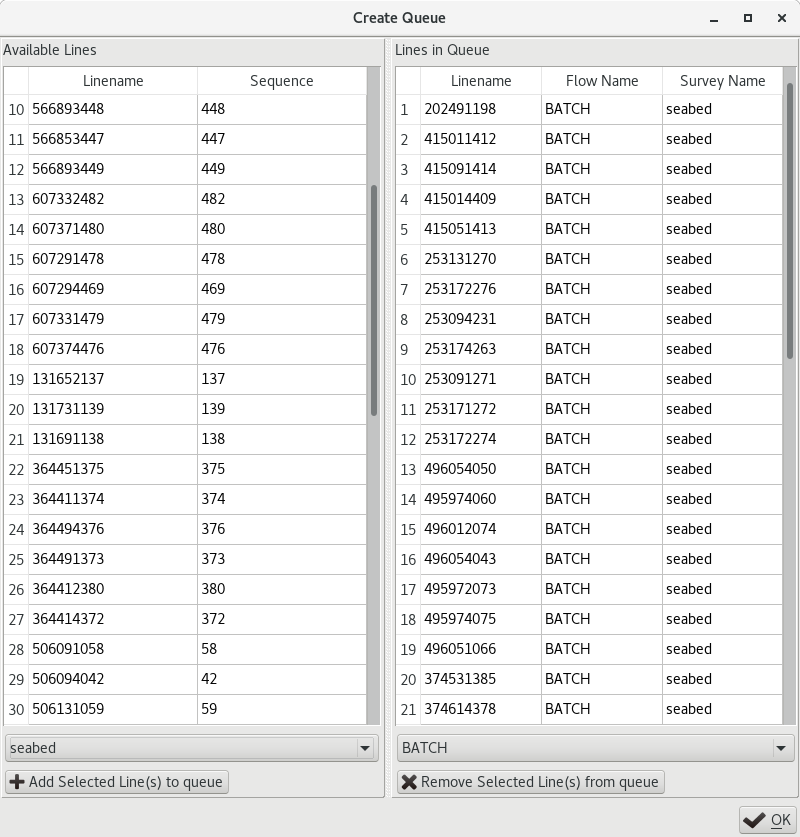

- Queue.pm - Adds the P2/11 files that are to be imported to the automation system's queue

- Tasks.pm - Defines a series of methods for handling the process management

- Events.pm - Collects and collates the statistics from the Iris application
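To illustrate how a database-backed file queue like the one described for Structure.pm and Queue.pm might behave, here is a small sketch. It is an assumption-heavy stand-in: it is written in Python rather than Perl, it uses SQLite in place of PostgreSQL so that it runs without a server, and the `line_queue` table, its columns, the function names and the file name in the usage line are all hypothetical.

```python
import sqlite3
from typing import Optional, Tuple


def create_structure(conn: sqlite3.Connection) -> None:
    """Create the (hypothetical) queue table if it does not exist yet."""
    with conn:
        conn.execute(
            """
            CREATE TABLE IF NOT EXISTS line_queue (
                id     INTEGER PRIMARY KEY,
                path   TEXT NOT NULL UNIQUE,
                status TEXT NOT NULL DEFAULT 'pending'
            )
            """
        )


def register_file(conn: sqlite3.Connection, path: str) -> bool:
    """Add a file to the queue; return False if it was already registered."""
    try:
        with conn:
            conn.execute("INSERT INTO line_queue (path) VALUES (?)", (path,))
        return True
    except sqlite3.IntegrityError:
        # The UNIQUE constraint on path rejected a duplicate registration.
        return False


def next_pending(conn: sqlite3.Connection) -> Optional[Tuple[int, str]]:
    """Return (id, path) of the oldest pending file, or None if the queue is empty."""
    return conn.execute(
        "SELECT id, path FROM line_queue WHERE status = 'pending' ORDER BY id LIMIT 1"
    ).fetchone()


def mark(conn: sqlite3.Connection, file_id: int, status: str) -> None:
    """Record the outcome for one queued file."""
    with conn:
        conn.execute(
            "UPDATE line_queue SET status = ? WHERE id = ?", (status, file_id)
        )


conn = sqlite3.connect(":memory:")
create_structure(conn)
register_file(conn, "line_001.p211")  # hypothetical file name
print(next_pending(conn))
```

Storing the status alongside each registered file is one way to let the same table drive both the batch run and the later per-file reporting.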

To execute the automation, a small script was created that imports the relevant Perl modules and triggers the correct methods for the batch processing task.

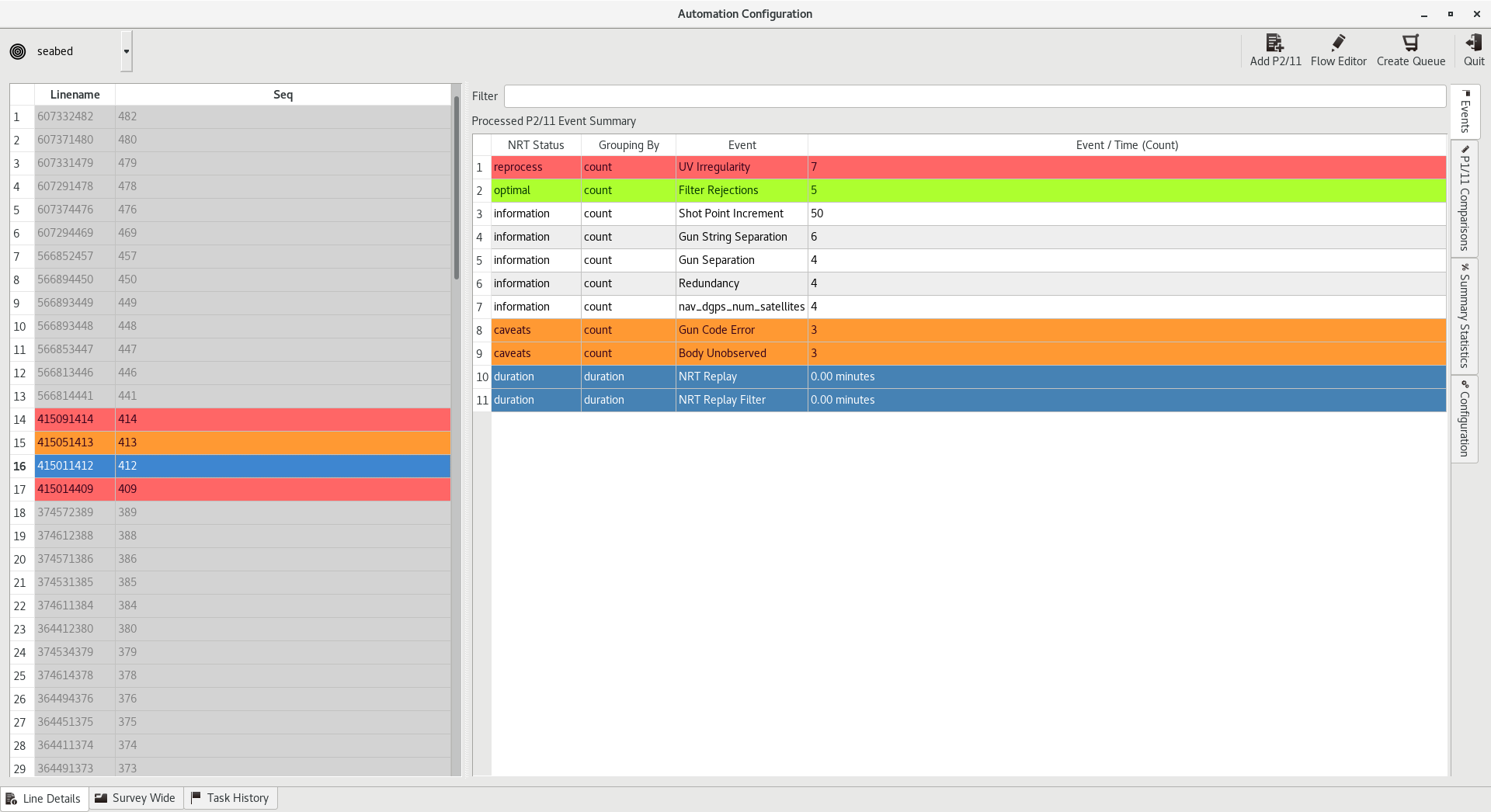

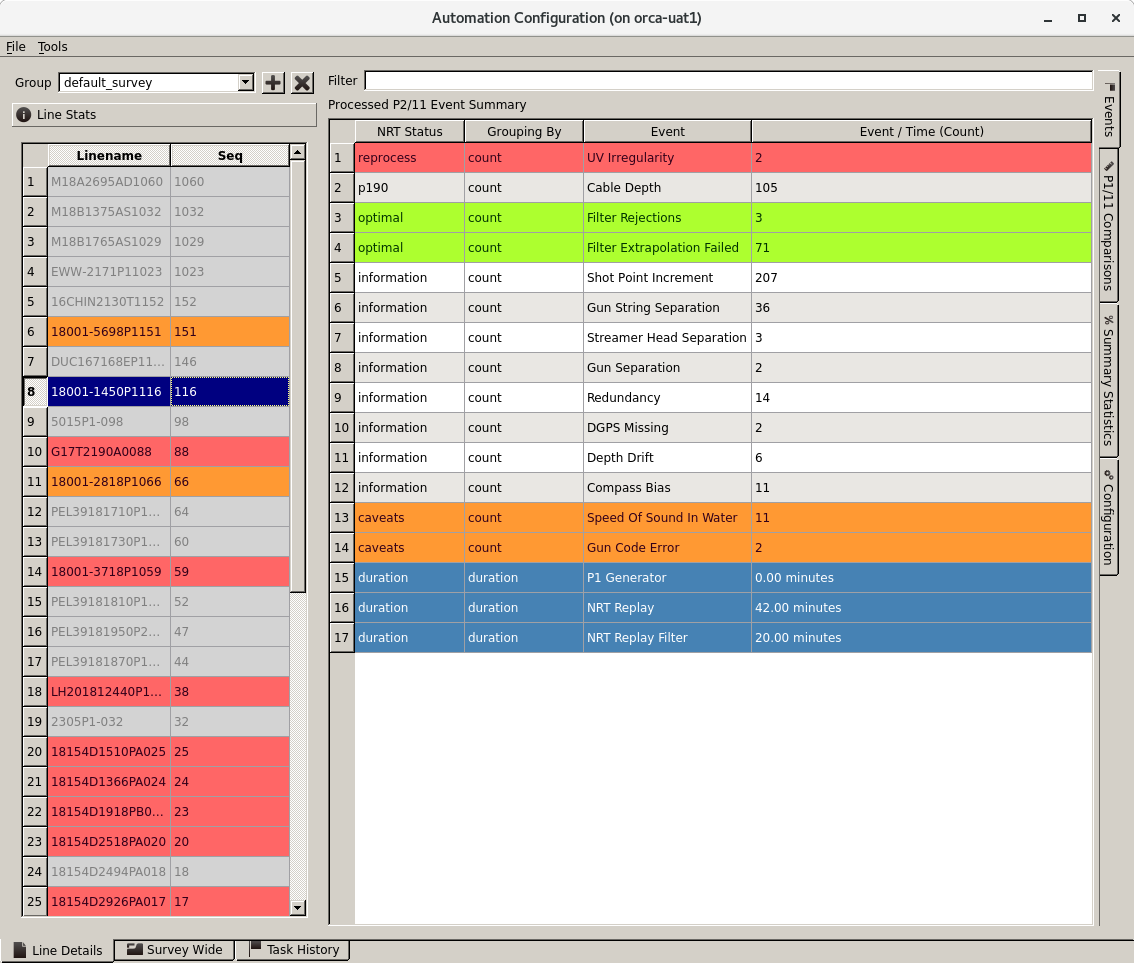
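A headless driver of this kind usually comes down to a loop over the queued files that logs each outcome and reports overall success through its exit code. The sketch below shows that shape in Python for illustration only; the original script is Perl, and `run_batch`, `exit_code` and the `process_file` callable are hypothetical names, not the project's API.

```python
import logging
from typing import Callable, Dict, Iterable

log = logging.getLogger("batch")


def run_batch(
    paths: Iterable[str], process_file: Callable[[str], None]
) -> Dict[str, str]:
    """Process every path and return {path: "done" | "failed"}.

    A failure on one file is logged and does not stop the remaining files.
    """
    report: Dict[str, str] = {}
    for path in paths:
        log.info("processing %s", path)
        try:
            process_file(path)
            report[path] = "done"
        except Exception:
            # log.exception records the traceback for later inspection.
            log.exception("failed on %s", path)
            report[path] = "failed"
    return report


def exit_code(report: Dict[str, str]) -> int:
    """Non-zero when any file failed, so a scheduler can detect a bad run."""
    return 1 if "failed" in report.values() else 0


def print_report(report: Dict[str, str]) -> None:
    """Write a one-line status per processed file."""
    for path, status in report.items():
        print(f"{status:6} {path}")
```

A script built this way needs no display and no user interaction, so it can be started from a scheduler such as cron or from inside a container, with `exit_code(report)` passed to `sys.exit` at the end of the run.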

To view the results, monitor the progress, and configure the processing workflow and line queue, a Python application was created that uses the PyQt modules to present the information in a desktop application.

This application offered a line-by-line view (for each imported file), a survey-wide view (for all files in each survey), and a task view showing the progress of the workflows.

Some additional configuration tools were added to help set up a processing flow and line queue, so that a batch processing task could be configured and then triggered at a later stage.

The GUI application and the Perl application were separate processes, so the GUI application was not required for the automation to run.
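One common way to keep a viewer and a batch process decoupled like this is for the viewer to read task status from a shared database instead of talking to the worker directly. The sketch below assumes that arrangement; it is not confirmed as the project's design. SQLite stands in for PostgreSQL so the example runs on its own, and the `task_status` table, its columns and both function names are hypothetical.

```python
import sqlite3
from typing import Dict


def progress_summary(conn: sqlite3.Connection) -> Dict[str, int]:
    """Count tasks per status using a read-only query."""
    rows = conn.execute(
        "SELECT status, COUNT(*) FROM task_status GROUP BY status"
    ).fetchall()
    return {status: count for status, count in rows}


def format_progress(summary: Dict[str, int]) -> str:
    """Turn the per-status counts into a one-line progress message."""
    total = sum(summary.values())
    done = summary.get("done", 0)
    percent = 100.0 * done / total if total else 0.0
    return f"{done}/{total} tasks done ({percent:.0f}%)"


# Demo data standing in for rows written by the batch process.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE task_status (task TEXT, status TEXT)")
conn.executemany(
    "INSERT INTO task_status VALUES (?, ?)",
    [
        ("import", "done"),
        ("filter", "done"),
        ("adjust", "running"),
        ("compare", "pending"),
    ],
)
print(format_progress(progress_summary(conn)))
```

In a PyQt application, a function like `progress_summary` could be called periodically from a `QTimer` callback to refresh the task view, while the batch process carries on whether or not the viewer is open.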

In Summary

The application has been successfully deployed to help the development team reach their next milestone. By re-running the automated tasks regularly, it is possible to confirm that the results and behaviour of the application are as expected.

The application was also deployed to AWS infrastructure and used as part of a SaaS solution to present the progress of the development effort to one of our clients.

- Perl

- Python

- PyQt

- CRON

- Data Analysis

- Data Parsing

- PostgreSQL

- UI Design

- Docker

- AWS EC2
